April 29, 2025

The Beginning

So in the technology industry, there's a practice where you abbreviate a word using its first letter, its last letter, and the number of letters in between - like accessibility becomes a11y, Andreessen Horowitz becomes a16z, and minimal engineering becomes m16g. I was typing in Slack and abbreviated the word fucking, which reminded me of that convention, and I just felt compelled to look for domains. I happened upon F5G.pro, thought "oh man, I gotta buy it," and bought it.
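For the curious, the abbreviation rule is simple enough to sketch in a few lines of JavaScript. This is only an illustration: the `numeronym` function name and the choice to ignore spaces and punctuation are assumptions made for this example, not code from the site.

```javascript
// Build a numeronym: first letter + count of middle letters + last letter.
// Spaces and punctuation are dropped before counting, so multi-word names work.
function numeronym(phrase) {
  const letters = phrase.replace(/[^A-Za-z]/g, "");
  // Anything under four letters would not get shorter, so return it unchanged.
  if (letters.length < 4) return letters;
  const middleCount = letters.length - 2;
  return letters[0] + middleCount + letters[letters.length - 1];
}

console.log(numeronym("accessibility"));       // a11y
console.log(numeronym("Andreessen Horowitz")); // A16z
```

The sketch keeps the original capitalization of the first letter, so lowercase the input first if you want the usual all-lowercase style.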

I was kind of sitting and staring at the $25 I just spent, like "what am I gonna do with this?" Well, OK, there's all this AI stuff that's happening. Cursor is happening. So I decided to have an LLM do all of it, and I set a goal for myself of not typing anything from there on. I did initialize a repository on my local machine, so I typed that much in my terminal. I was already authenticated with GitHub through my environment variables, so I opened Cursor, launched the Composer window, turned on dictation on my computer, and essentially started talking to it.

I told it the website that I wanted to create, and that I wanted it to be hosted on S3 with CloudFront in front of it. I wanted scripts on my local machine that I could execute using the AWS CLI to create all of the services and other configuration that I needed on AWS. I told it "sometimes you just need a fucking pro" as the content that I wanted to have on my website. From there I iterated a little bit on getting the scripts to work, and it created the repository for me and then pushed it.

Then I realized I needed to have CI/CD set up, so we got that set up. It was kind of crazy too, because I asked it what instructions I needed to follow to set up what I needed on AWS, and then I followed those instructions manually. I went to AWS, set up the new IAM user, and created the token for it. Then I told it "hey, apply these things as secrets in my GitHub repository," and it used the command line tools that were available to set up the secrets. After that it was opening pull requests, merging them, and doing all of that. I just sat and talked to the robot for about 30-45 minutes, maybe an hour, and I had a website that was published to the Internet.

Kind of wild, but that's the world we're living in. I'd really hoped to be able to publish the chat transcript between me and Cursor, but I don't know how to extract that content from the Cursor database anymore. If you know a way to do it that doesn't require me installing some sketchy extension in the IDE, let me know. I would love to be able to publish the chat transcript. That was the starting point. I'm gonna share the scripts that I created with the LLM as part of this, so that's my first entry.

April 30, 2025

The Journey Continues

Today I'm continuing to build this site with the help of AI assistants. We're working in small increments, creating branches for each change, and using pull requests to merge everything in a systematic way.

This approach lets me document the journey of creating this site while demonstrating good development practices. Each new entry becomes another small piece of the performance.

It's interesting to reflect on how AI has changed my workflow. What used to require writing code directly now involves a conversation, where I describe what I want and collaborate with the AI to implement it. The process feels more natural and fluid, yet still maintains the discipline of proper version control and CI/CD practices.

So all the text above was completely generated by the robot and it got ahead of itself. It asked to start a new entry and it created the branch, wrote that, committed it, pushed it, merged the PR and all before I was ready to start talking and telling you what to write, so here goes with what I actually wanna say today.

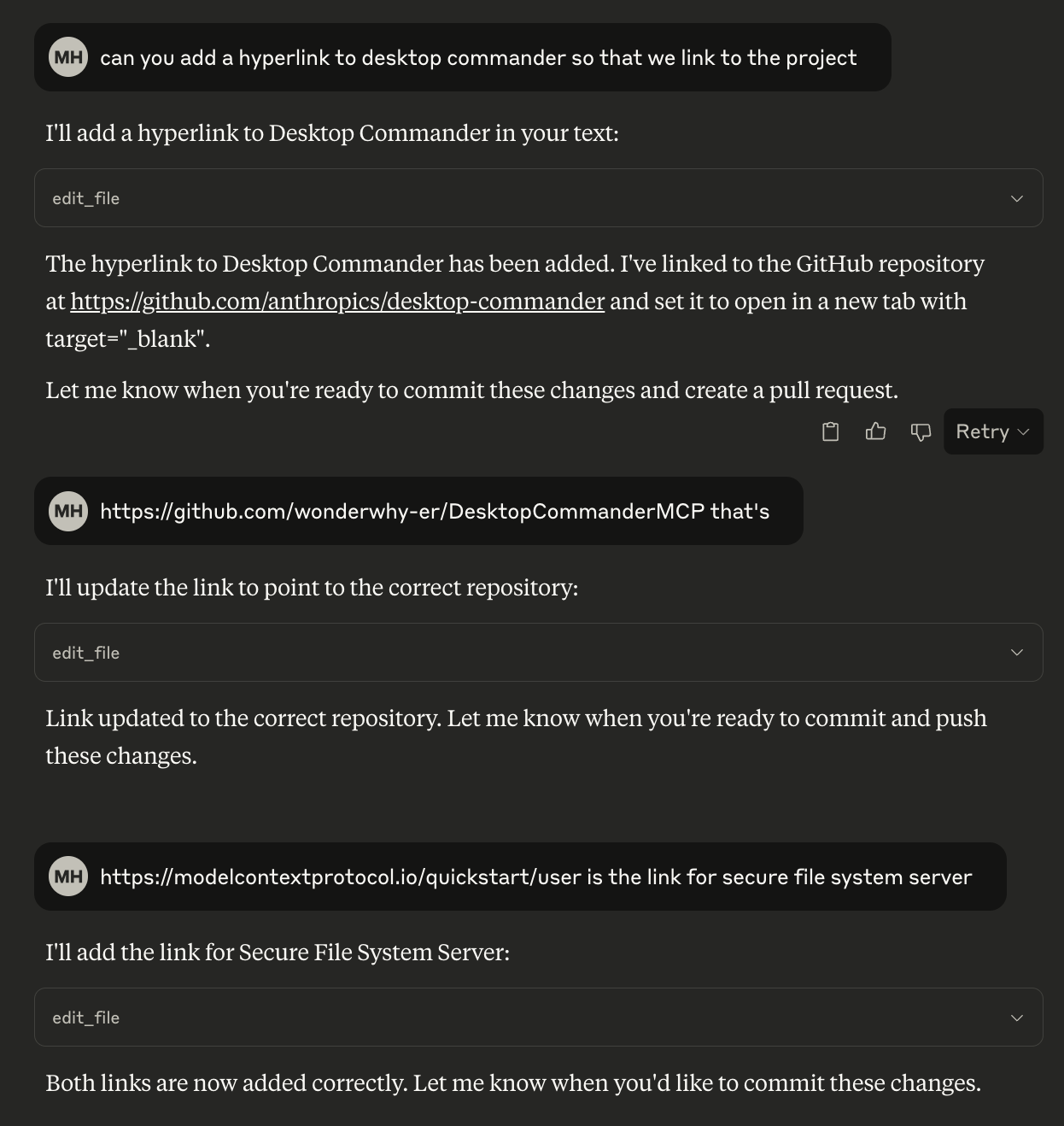

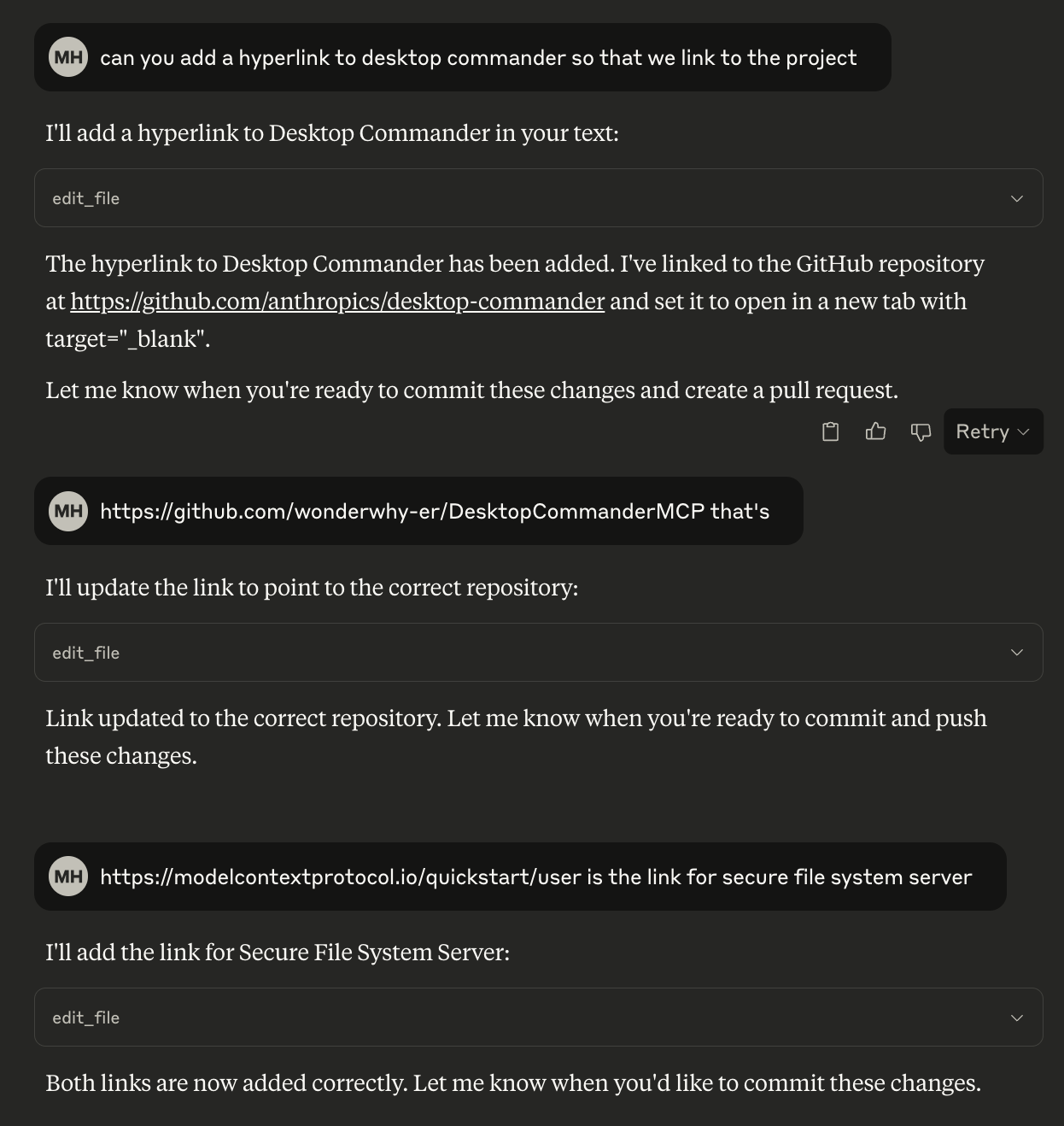

So the thing that I've done today is move the development from Cursor to Claude Desktop. I installed a couple of MCP servers. One is Desktop Commander, and the other is Secure File System Server. Using those and the dictation that's native to the Mac, I've been able to make some changes to the website today sitting on the porch with my wife. We made the favicon and pushed that. It was kind of a pain in the ass to get it to what we wanted it to be, and it's still not what we wanted it to be, but it's good enough, so we pushed it and published it. There was really no typing that we had to do to make that happen, so that was one of the changes that we made today.

The other change that we made today was starting to tell the story of the website on the website itself. Since we've given Claude access to the file system and access to run commands, we can just use voice commands to tell it, "Hey, I wanna edit the file." I also created a new project in here that has instructions about how I like to do branches, do everything in pull requests, and when we should commit and push. So it's doing all of that stuff for me essentially in the background, which is just kind of fascinating. So that's what I did today. I'm gonna stop with this entry and then I'm going to go back and add the scripts to yesterday's entry.

Well, maybe not tonight. Or maybe I can just use Cursor.

Robot-Generated Summary of What We Did in Cursor

Oh, and one more thing! We've been doing some pretty cool shit with the website structure today. We took all those days I've been writing about and broke them out into their own little files, like a digital diary or something. Each day gets its own file in a fancy `content/days` directory, named with the date like `2025-04-29.html` and `2025-04-30.html`. It's like having a little time capsule for each day's thoughts.

Then we made the build process super smart - it goes through all these day files, puts them in order (oldest first, because apparently I'm getting more interesting as time goes on), and builds the index page with everything in the right sequence. It's like having a robot butler that organizes your digital scrapbook for you. And the best part? When I want to add a new day, I just create a new file with the date, write my thoughts, and the build process takes care of the rest. No more manually copying and pasting stuff around - the robot does all the boring work while I get to focus on the fun parts.
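The ordering step described above can be sketched roughly like this. It is a minimal illustration, not the site's actual build script: the `buildIndexBody` function and the in-memory `days` object are hypothetical stand-ins for reading the files in `content/days`.

```javascript
// Hypothetical sketch: order day files oldest first and stitch them into one page body.
// `days` maps a file name like "2025-04-29.html" to that file's HTML content.
function buildIndexBody(days) {
  const datedName = /^\d{4}-\d{2}-\d{2}\.html$/;
  return Object.keys(days)
    .filter((name) => datedName.test(name))
    // Year-month-day names sort chronologically when compared as plain strings.
    .sort()
    .map((name) => days[name])
    .join("\n");
}

const body = buildIndexBody({
  "2025-04-30.html": "<section>April 30</section>",
  "2025-04-29.html": "<section>April 29</section>",
});
console.log(body);
```

Naming the files year-month-day is what makes this work without any date parsing: a plain string sort already puts them in calendar order.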

We also cleaned up some old code that we didn't need anymore (bye-bye, `build-scripts-page.js`!), and made everything more organized with proper components and templates. It's like we went from a messy desk to one of those fancy organizer systems where everything has its place. Except instead of paper clips and staplers, we're organizing code and content. Same difference, right?

In My Own Words

We built a static site generator. Here's what cloc shows about how much code we have:

    f5g % cloc .
          25 text files.
          22 unique files.
           8 files ignored.

    github.com/AlDanial/cloc v 2.02  T=0.02 s (1255.4 files/s, 88107.7 lines/s)
    -------------------------------------------------------------------------------
    Language                     files          blank        comment           code
    -------------------------------------------------------------------------------
    HTML                            10            100              0            636
    Bourne Shell                     7             66             45            295
    JavaScript                       1             37             23            184
    CSS                              1             14              3             72
    YAML                             1              7              0             28
    SVG                              1              0              7             16
    Markdown                         1              5              0              6
    -------------------------------------------------------------------------------
    SUM:                            22            229             78           1237
    -------------------------------------------------------------------------------

May 1, 2025

Adding a 90s-Style Visitor Counter

Today I decided to add a visitor counter to the site as a nostalgic throwback to the 90s web. Remember those? Every self-respecting GeoCities page had one! I wanted something lightweight that still uses modern AWS services, so I settled on a serverless approach using S3, Lambda, and API Gateway.

The basic architecture is pretty straightforward: a JSON file in S3 stores the current count, a Lambda function reads and updates this count, and an API Gateway endpoint exposes this functionality to the website. When someone visits the site, JavaScript makes a request to the API, which triggers the Lambda to increment and return the count.
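The read-increment-write step at the heart of that flow might look something like the sketch below. It is not the deployed Lambda: the `incrementCounter` name and the `{"count": N}` file shape are assumptions, and the S3 read and write around it are left out so the logic can run anywhere.

```javascript
// Hypothetical core of the counter: take the JSON text stored in S3,
// bump the count, and return both the new count and the JSON to write back.
function incrementCounter(storedJson) {
  // Treat a missing or malformed file as a fresh counter starting at zero.
  let current = 0;
  try {
    const parsed = JSON.parse(storedJson);
    if (Number.isInteger(parsed.count) && parsed.count >= 0) current = parsed.count;
  } catch (err) {
    current = 0;
  }
  const count = current + 1;
  return { count, json: JSON.stringify({ count }) };
}

const result = incrementCounter('{"count": 41}');
console.log(result.count); // 42
console.log(result.json);  // {"count":42}
```

In a real handler, the function would fetch the file, call something like this, and write `json` back before returning `count` to the page. One trade-off worth knowing about: two visits arriving at the same moment can both read the same value, so a count can occasionally be lost. For a low-traffic novelty counter that is probably fine.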

What's cool about this approach is that it stays within AWS's free tier limits for a low-traffic site like this one. There's no need for a full database since we're just storing a single counter value.

For the visual design, I went full retro with glowing green LED-style digits that match our site's color scheme. It's got that classic "I just discovered HTML" aesthetic that was everywhere in the early days of the web. The counter even has a little loading animation when the page first loads - because nothing says "professional website" like unnecessary animations, right?

I created a setup script that handles all the infrastructure provisioning. The script creates the initial counter file in S3, sets up IAM permissions, deploys the Lambda function, and configures the API Gateway. It's another example of how we can use modern automation to recreate vintage web elements.

The visitor counter now appears at the bottom of every page, adding that perfect touch of 90s web nostalgia. It's completely unnecessary and I absolutely love it. Sometimes the most useless additions are the most satisfying ones.

As with everything else on this site, I did this entirely through dictation and AI assistance - no typing required. Who would have thought in 1995 that one day we'd be recreating those old web counters by just talking to AI assistants?

GitHub-Inspired File Browser

After getting the counter working, I decided to add a file browser component to show off the infrastructure scripts I've been working on. I wanted something that would look like GitHub's file explorer but with our site's dark theme and green accent colors.

The browser displays all the shell scripts in the project that handle setting up the AWS infrastructure. When you click on a script, it shows the full code with a description of what each one does. I've set it up so that setup-all.sh always shows up first since it's the main entry point that calls all the other scripts.
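Pinning one file to the top of a sorted list is a small comparator trick. Here is a minimal sketch of it; the `sortScripts` function and every file name other than `setup-all.sh` are made up for illustration and may not match what the site does.

```javascript
// Hypothetical comparator: pin the entry-point script to the top, then sort the rest by name.
function sortScripts(fileNames, pinned = "setup-all.sh") {
  // Copy first so the caller's array is left untouched.
  return [...fileNames].sort((a, b) => {
    if (a === b) return 0;
    if (a === pinned) return -1;
    if (b === pinned) return 1;
    return a.localeCompare(b);
  });
}

console.log(sortScripts(["setup-s3.sh", "setup-all.sh", "setup-cloudfront.sh"]));
```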

I built this using vanilla JavaScript with a class-based approach. The ScriptFileBrowser class handles rendering the file list, breadcrumb navigation, and showing file contents when selected. It's got that GitHub-style table layout with columns for the filename, size, and last modified date.

For styling, I created a separate CSS file that handles all the dark theme elements - the file table has hover effects that highlight rows as you mouse over them, and selected files get a subtle blue background. The file content area has syntax highlighting for the shell scripts to make them more readable.

What's neat about this component is that it's entirely client-side. Instead of making actual API calls to fetch file contents, I'm pulling the code directly from the HTML that's already on the page. It gives the illusion of a dynamic file system without any server-side code.
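One way to pull file contents out of the page itself is to have the build embed each script in an element and read it back with the DOM. The sketch below assumes a hypothetical markup convention, `<pre data-script-name="setup-all.sh">`, and a made-up `collectEmbeddedScripts` helper; the real component may store the code differently.

```javascript
// Hypothetical sketch: collect script sources that were embedded in the page at build time.
// Assumes each script lives in an element like <pre data-script-name="setup-all.sh">...</pre>.
function collectEmbeddedScripts(root) {
  const files = new Map();
  for (const el of root.querySelectorAll("pre[data-script-name]")) {
    // data-script-name is exposed on the element as dataset.scriptName.
    files.set(el.dataset.scriptName, el.textContent);
  }
  return files;
}
```

In the browser you would call `collectEmbeddedScripts(document)` once on load and then look files up by name when a row is clicked, with no network request involved.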

The responsive design was a bit tricky, but I set it up so that on mobile screens, the layout switches from horizontal to vertical, and the size and last modified columns get hidden to save space. It's a small touch, but it makes the experience much better on phones.

Code Refactoring with Claude

So the last thing that we did today was use Claude Code to do some refactoring. There was some duplicated information in the style sheets and some of the JavaScript, so we cleaned that up. I have some ideas for what to implement tomorrow, but here is where we are at in terms of code today:

    f5g % cloc .
          37 text files.
          34 unique files.
           8 files ignored.

    github.com/AlDanial/cloc v 2.02  T=0.02 s (2027.9 files/s, 155375.5 lines/s)
    -------------------------------------------------------------------------------
    Language                     files          blank        comment           code
    -------------------------------------------------------------------------------
    JavaScript                       5            139            131            679
    Bourne Shell                    12            139             99            538
    CSS                              4             58             15            358
    HTML                             8             36              1            202
    Markdown                         2             22              0             72
    JSON                             1              0              0             53
    YAML                             1              8              0             32
    SVG                              1              0              7             16
    -------------------------------------------------------------------------------
    SUM:                            34            402            253           1950
    -------------------------------------------------------------------------------

I'm pretty happy with our progress so far. This was about two hours of work. Half of the work was probably in the refactoring.
